Transformer models have emerged as a key architecture in machine learning and natural language processing (NLP). Transformers were first introduced in the paper "Attention Is All You Need" by Vaswani et al. (2017). They have largely replaced earlier sequential models such as recurrent neural networks (RNNs) and LSTMs, with remarkable success across a wide variety of tasks.

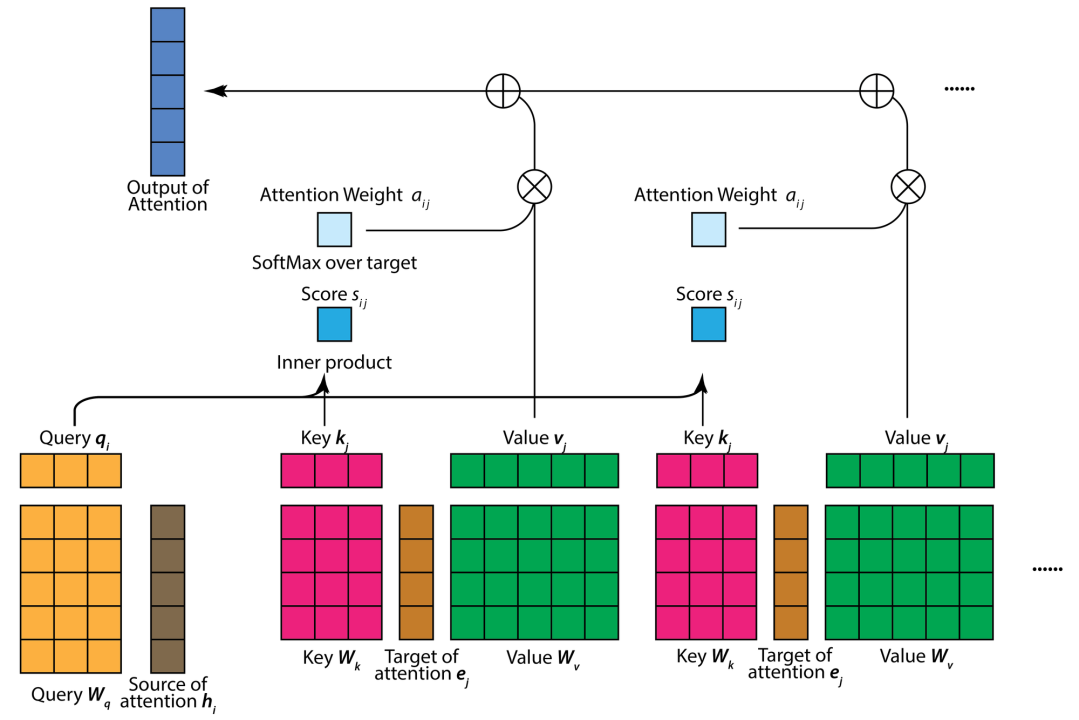

A Transformer model's attention mechanism is a crucial innovation that allows the model to weigh how relevant each token in a sequence is to every other token. Read more: https://www.linkedin.com/pulse/beyond-words-future-machine-learning-transformer-models-uday-k-ddeyc/?trackingId=qM1oYwAcTO%2BRf2jIkAxzTA%3D%3D
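As a rough illustration, the scaled dot-product attention described by Vaswani et al., Attention(Q, K, V) = softmax(QKᵀ / √d_k) V, can be sketched in plain Python. This is a minimal sketch that assumes queries, keys, and values are small lists of row vectors; the function names are hypothetical, and it omits the learned projections, multiple heads, and masking that a full Transformer uses.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)  # subtract the max to avoid overflow in exp
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def scaled_dot_product_attention(queries, keys, values):
    """Hypothetical helper computing softmax(Q K^T / sqrt(d_k)) V.

    queries: n_q x d_k, keys: n_k x d_k, values: n_k x d_v (lists of lists).
    Returns (outputs, weights): outputs is n_q x d_v, weights is n_q x n_k.
    """
    d_k = len(keys[0])
    d_v = len(values[0])
    scale = math.sqrt(d_k)
    outputs, weights = [], []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(d_k)
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / scale for k in keys]
        w = softmax(scores)  # attention weights for this query sum to 1
        weights.append(w)
        # Output is the attention-weighted sum of the value vectors
        out = [sum(w[j] * values[j][c] for j in range(len(values)))
               for c in range(d_v)]
        outputs.append(out)
    return outputs, weights


# Self-attention: the same token vectors serve as queries, keys, and values
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = scaled_dot_product_attention(tokens, tokens, tokens)
for row in weights:
    print([round(x, 3) for x in row])
```

Each printed row shows how strongly one token attends to each token in the sequence. Dividing by √d_k keeps the dot products from growing with the vector dimension, which would otherwise push the softmax toward near one-hot weights.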